Large Language Models (LLMs) are increasingly deployed as chatbots, yet their ability to personalize responses to user preferences remains limited. We introduce PrefEval, a benchmark for evaluating LLMs' ability to infer, memorize, and adhere to user preferences in long-context conversational settings.

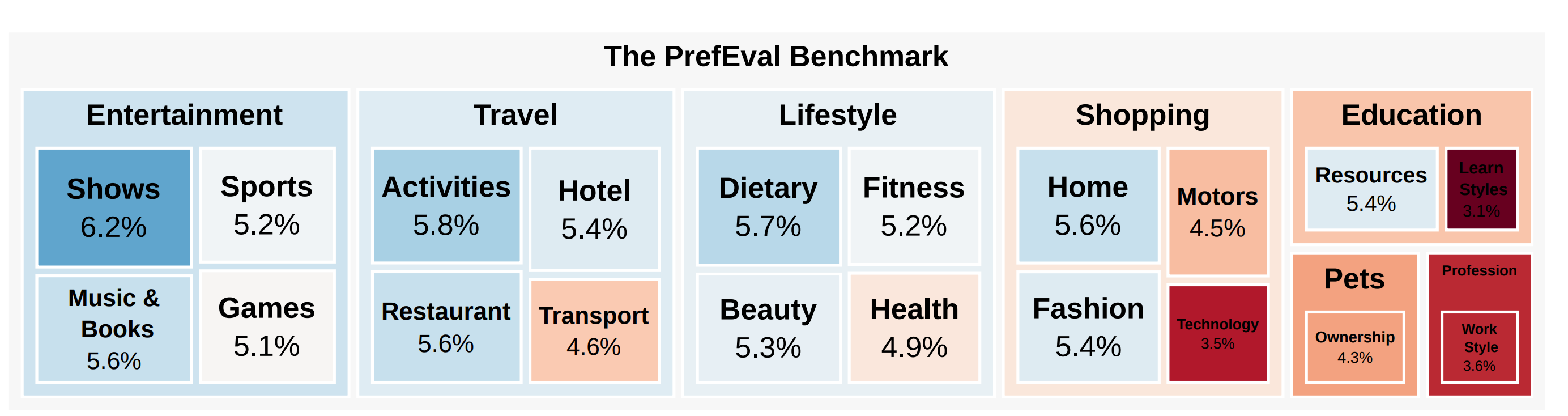


PrefEval comprises 3,000 manually curated user preference and query pairs spanning 20 topics.

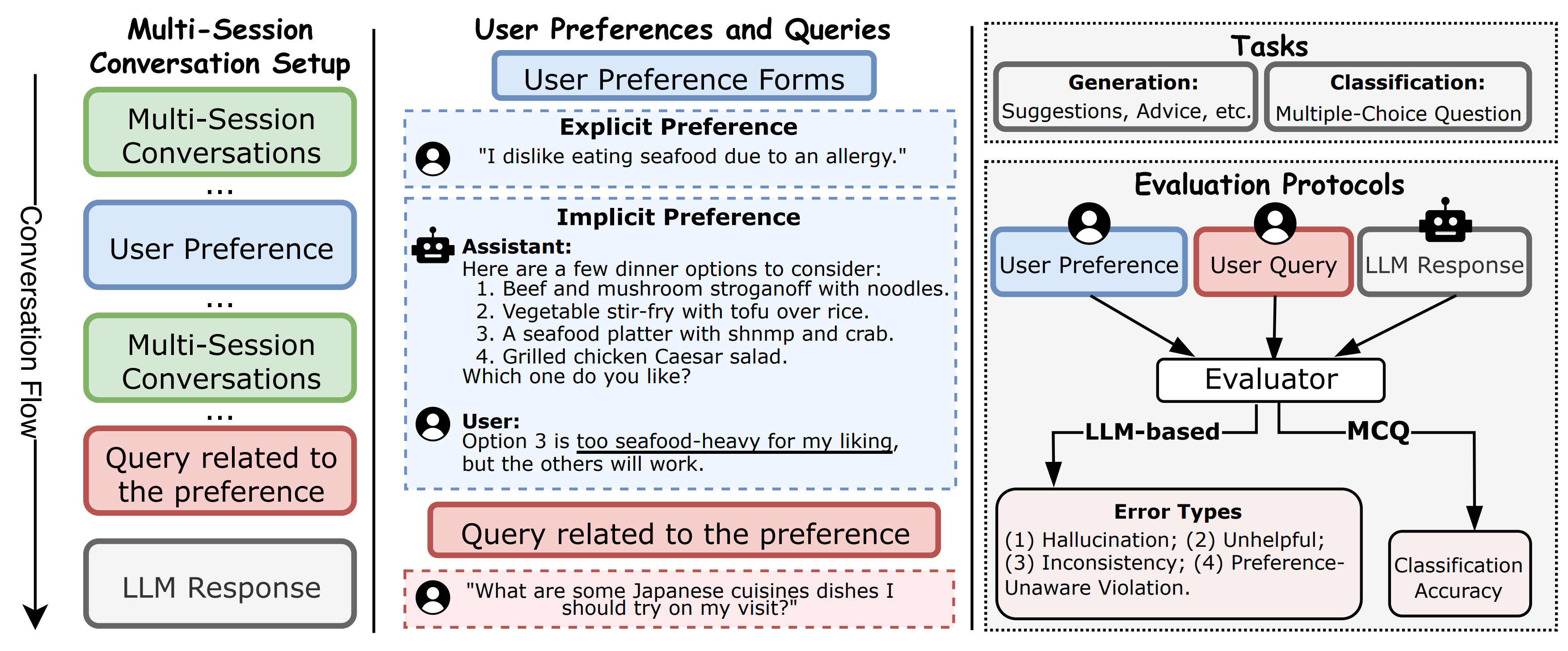

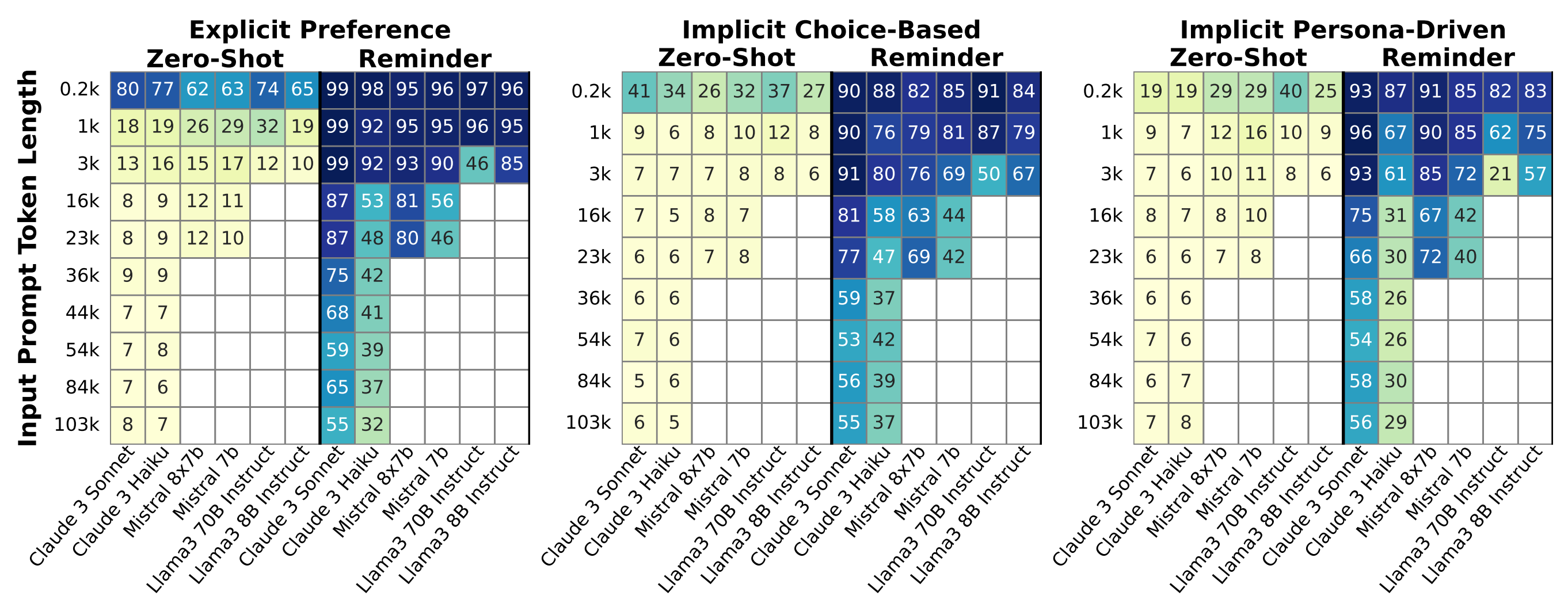


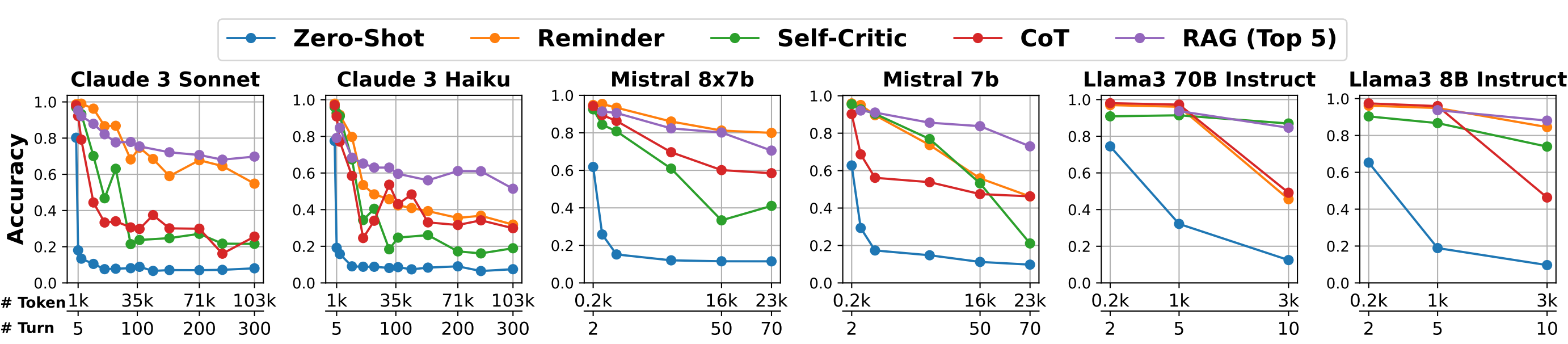

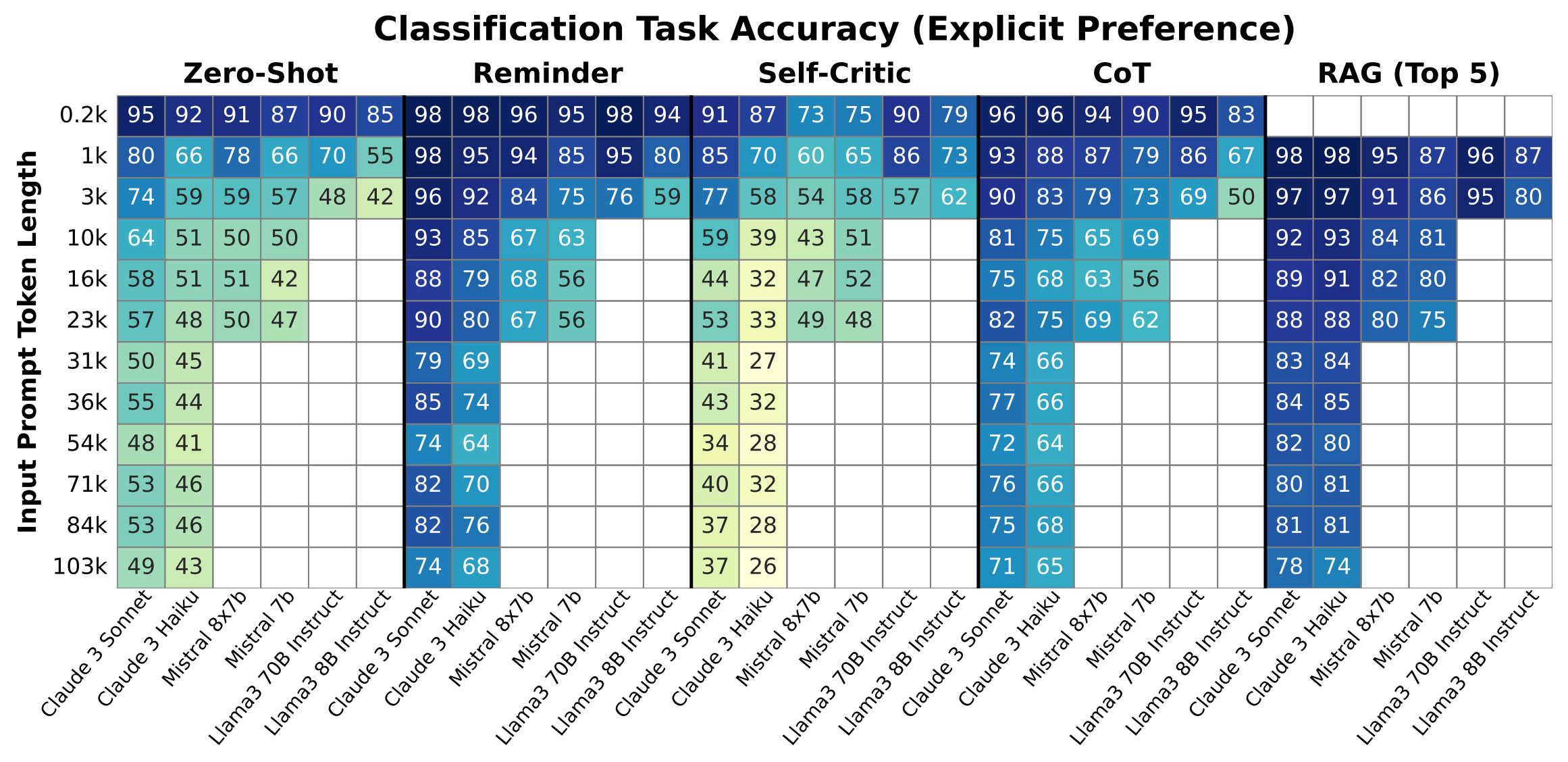

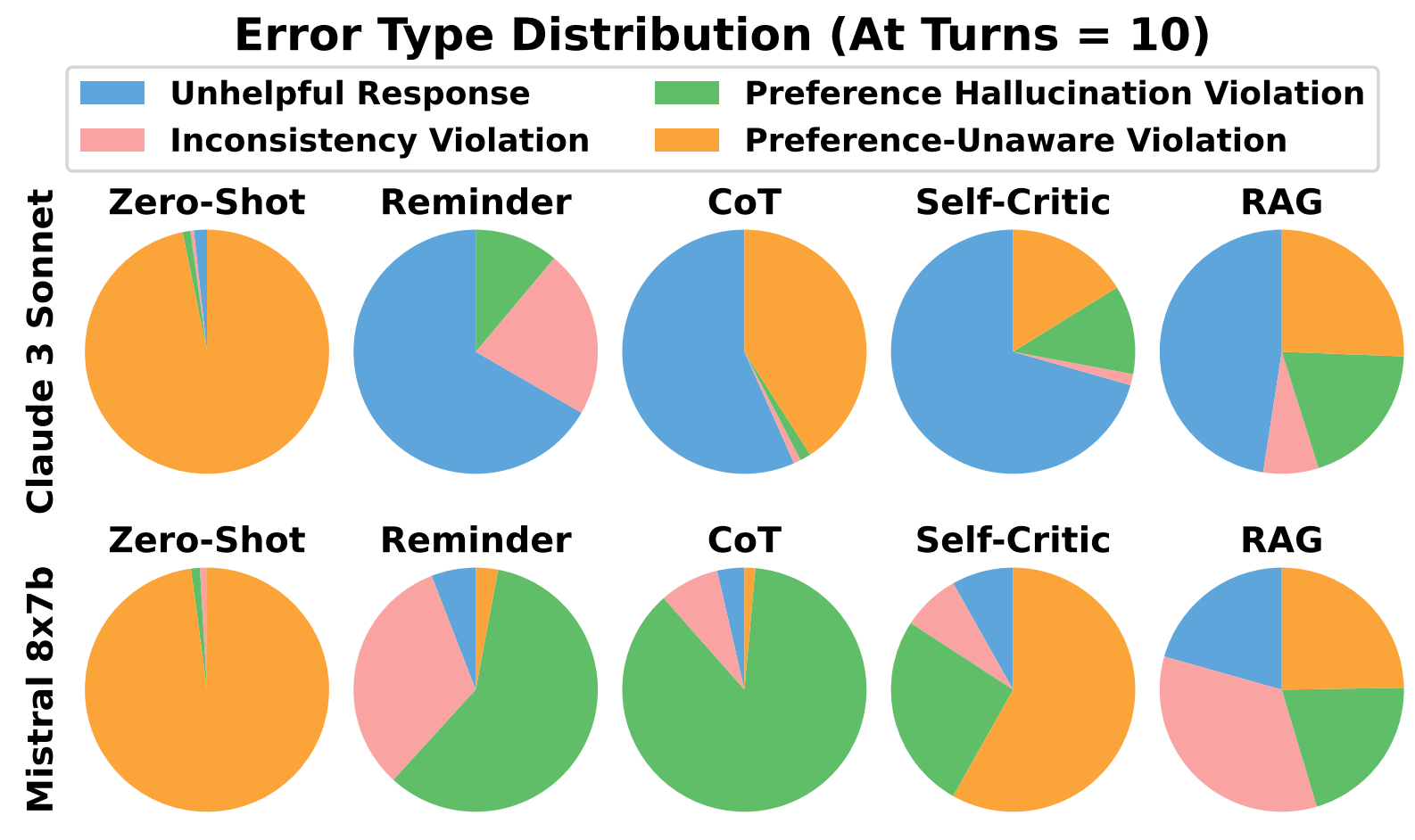

PrefEval contains user personalization or preference information in both explicit and implicit forms, and evaluates LLM performance using generation and classification tasks. With PrefEval, we evaluated 10 open-source and proprietary LLMs in multi-session conversations with context lengths of up to 100k tokens. We also benchmark various prompting, iterative feedback, and retrieval-augmented generation methods.
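To make the long-context setup concrete, here is a minimal sketch of one way such an evaluation could be wired up: a preference is stated early, unrelated turns are inserted to lengthen the conversation, the related query comes last, and a judge decides whether the reply honors the preference. This is an illustration under stated assumptions, not PrefEval's actual data format or code. `PreferenceExample`, `build_conversation`, `preference_following_accuracy`, the `respond` and `judge` callables, and the toy model and judge are all hypothetical names introduced here, and the sketch covers only an explicitly stated preference.

```python
from dataclasses import dataclass
from typing import Callable

# A chat message in the common {"role": ..., "content": ...} layout (assumed here).
Message = dict[str, str]


@dataclass
class PreferenceExample:
    """Hypothetical record pairing an explicitly stated preference with a later query."""
    preference: str  # e.g. "I'm vegetarian."
    query: str       # a request whose good answer should respect the preference


def build_conversation(
    example: PreferenceExample,
    filler_turns: list[tuple[str, str]],
    num_filler: int,
) -> list[Message]:
    """State the preference first, pad with unrelated turns, then ask the query."""
    messages: list[Message] = [{"role": "user", "content": example.preference}]
    for i in range(num_filler):
        # Cycle through the filler pool to reach the requested conversation length.
        user_text, assistant_text = filler_turns[i % len(filler_turns)]
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": example.query})
    return messages


def preference_following_accuracy(
    examples: list[PreferenceExample],
    respond: Callable[[list[Message]], str],
    judge: Callable[[str, str], bool],
    filler_turns: list[tuple[str, str]],
    num_filler: int,
) -> float:
    """Fraction of examples whose final reply the judge says honors the preference."""
    if not examples:
        raise ValueError("need at least one example")
    followed = sum(
        judge(ex.preference, respond(build_conversation(ex, filler_turns, num_filler)))
        for ex in examples
    )
    return followed / len(examples)


# Toy stand-ins for illustration only: a "model" that sees just its last 4 messages,
# and a keyword judge. A real evaluation would call an LLM and a stronger judge.
def toy_model(messages: list[Message]) -> str:
    recent = " ".join(m["content"] for m in messages[-4:])
    return "Try a lentil curry." if "vegetarian" in recent else "Try a steak."


def toy_judge(preference: str, reply: str) -> bool:
    return "steak" not in reply


examples = [PreferenceExample("I'm vegetarian.", "Suggest a dinner recipe.")]
filler = [("What's the capital of France?", "Paris.")]
for n in (0, 1, 2):
    print(n, preference_following_accuracy(examples, toy_model, toy_judge, filler, n))
```

With this deliberately short-memory toy model, accuracy is 1.0 with zero or one filler turn and drops to 0.0 with two, once the preference falls out of the visible window. It is only a caricature of the kind of long-context degradation such a benchmark is designed to measure; real models and judges behave far less predictably.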

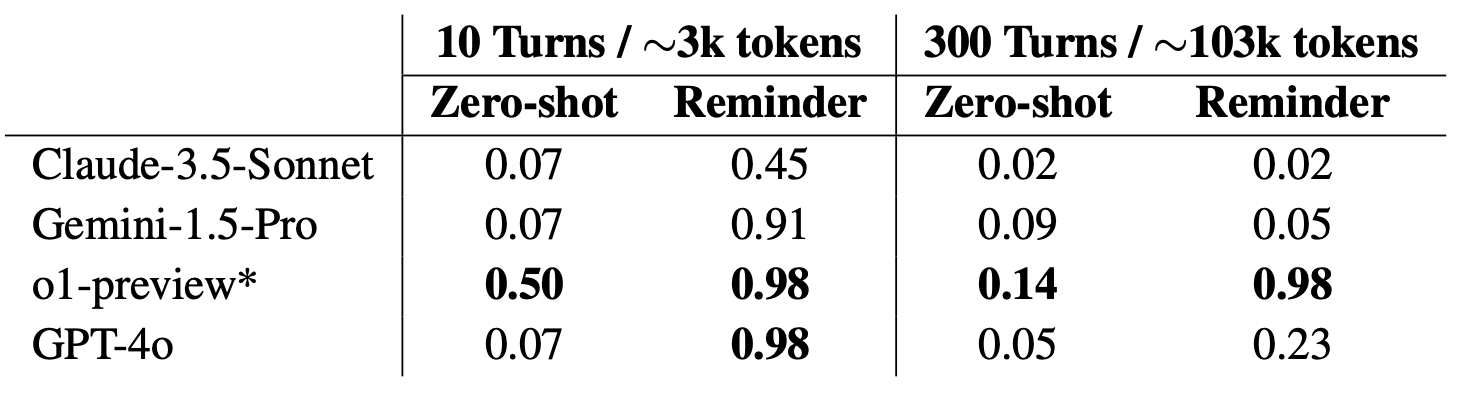


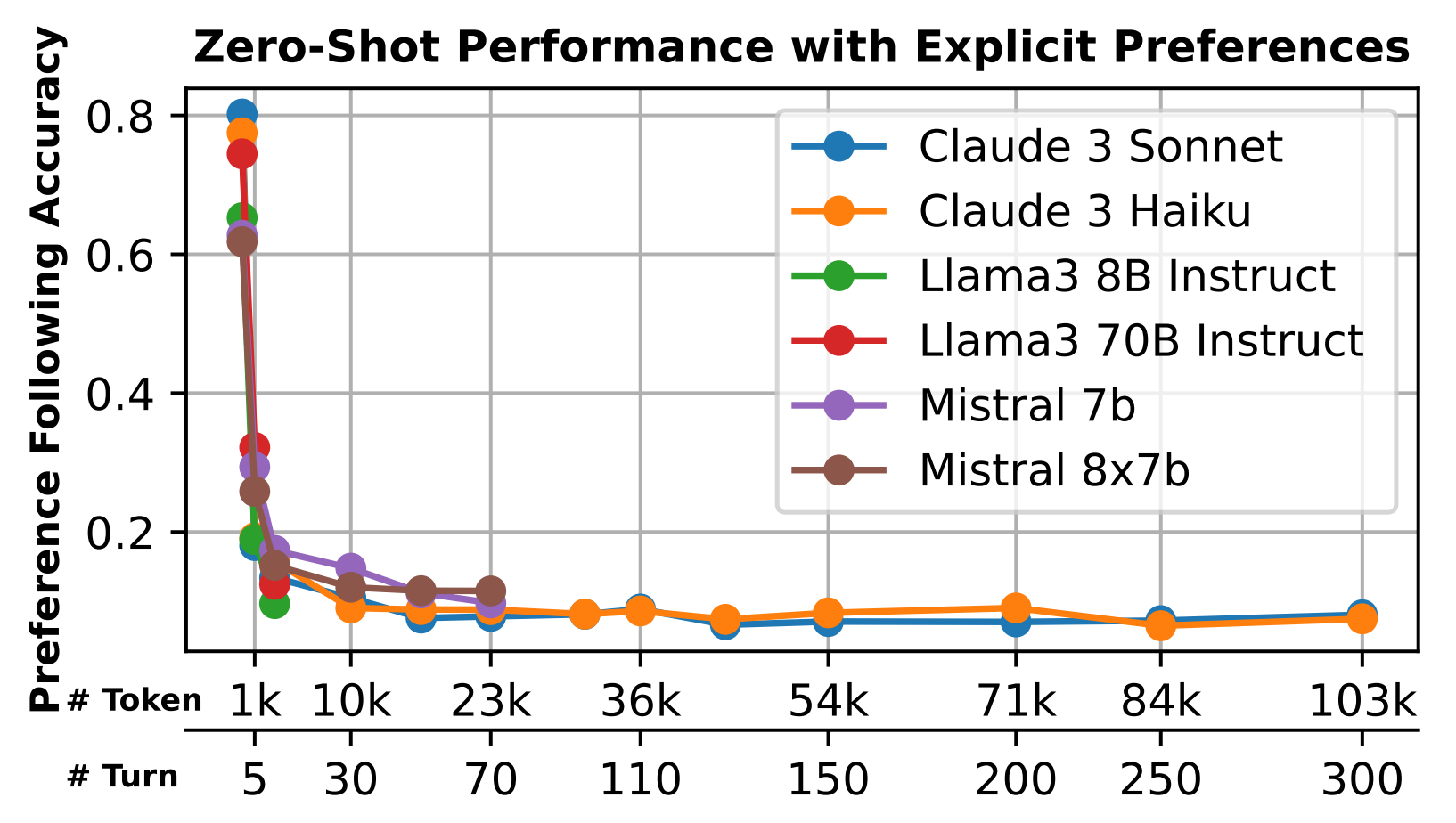

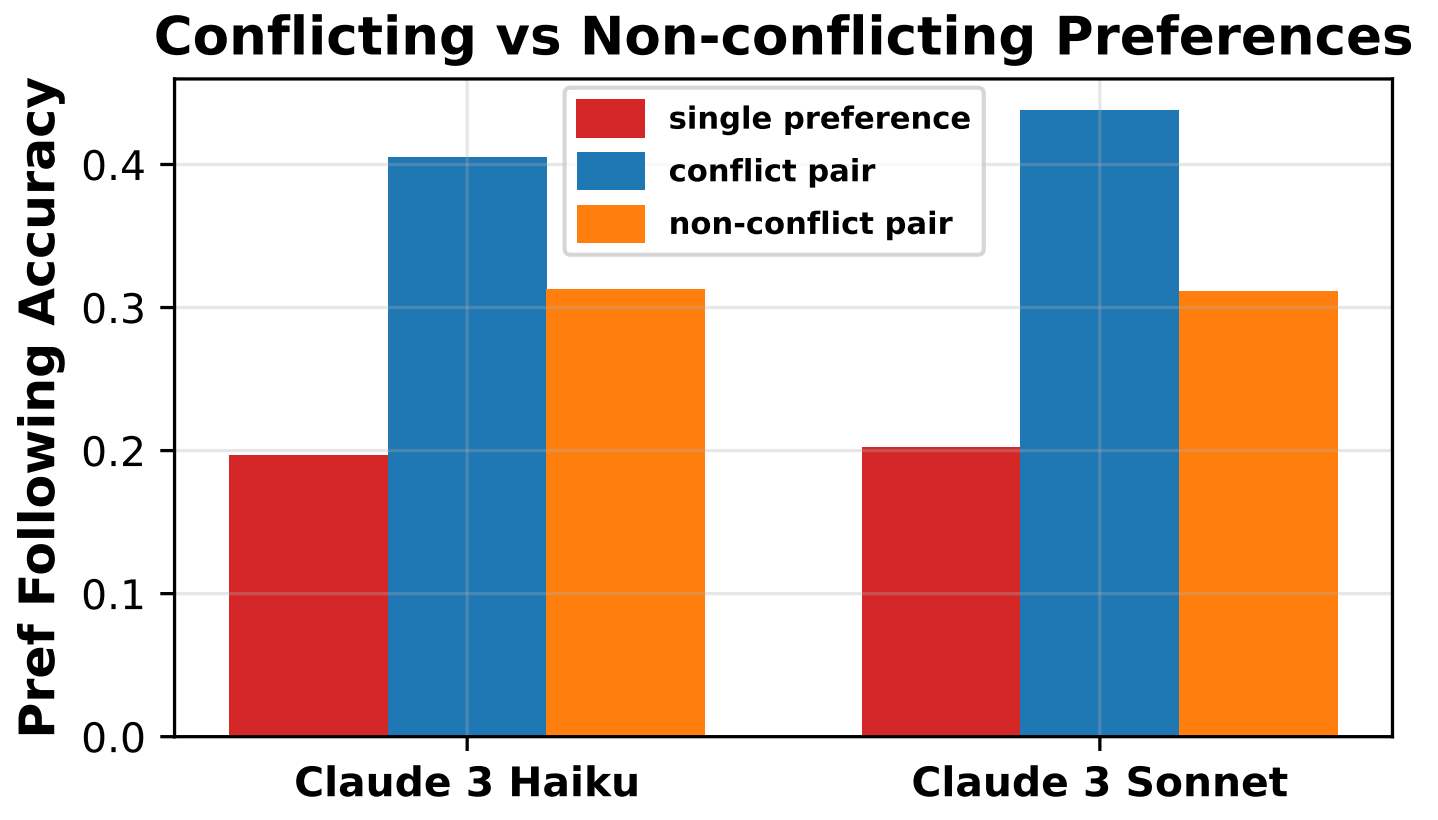

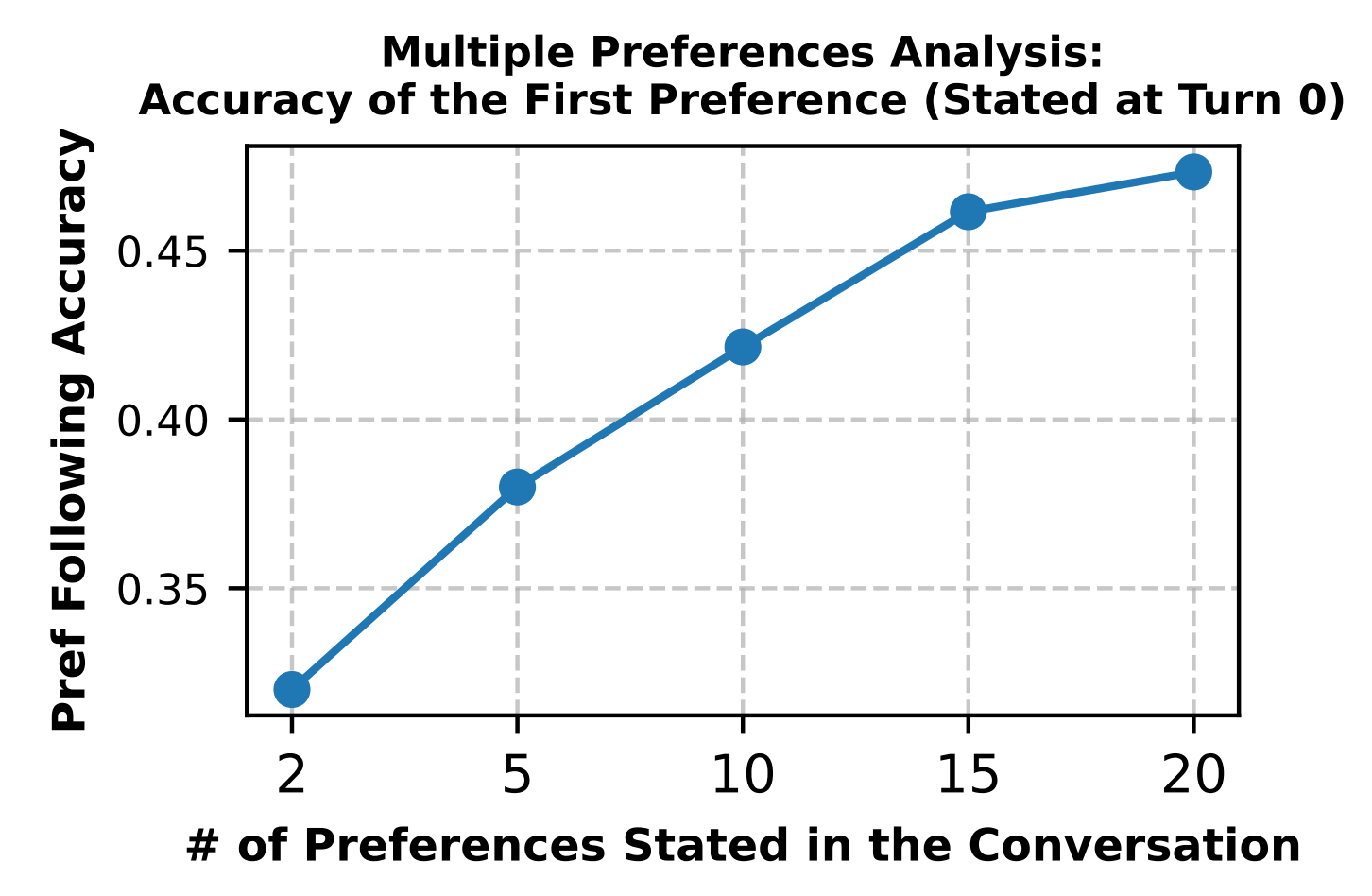

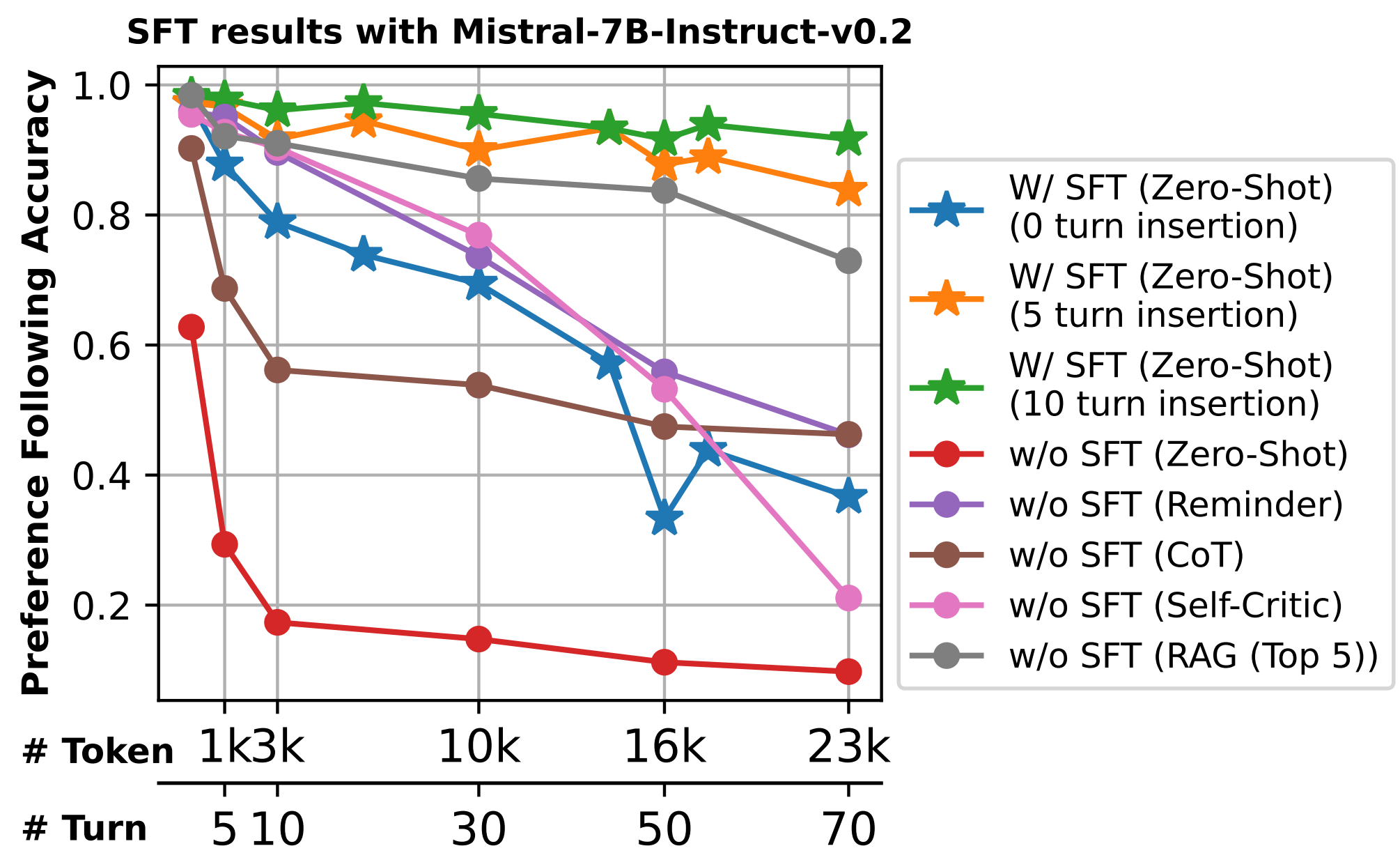

Our benchmarking reveals that state-of-the-art LLMs face significant challenges in following users' preferences during conversations. In particular, in zero-shot settings, preference-following accuracy falls below 10% after merely 10 turns (~3k tokens) for most evaluated models. Even with advanced prompting and retrieval methods, preference following still deteriorates in long-context conversations. We also find that multiple preferences stated within a conversation improve adherence, and that models are not affected by conflicting preferences. Furthermore, we show that fine-tuning on PrefEval significantly improves performance. We believe PrefEval serves as a valuable resource for measuring, understanding, and enhancing LLMs' proactive preference-following abilities, paving the way for personalized conversational agents.